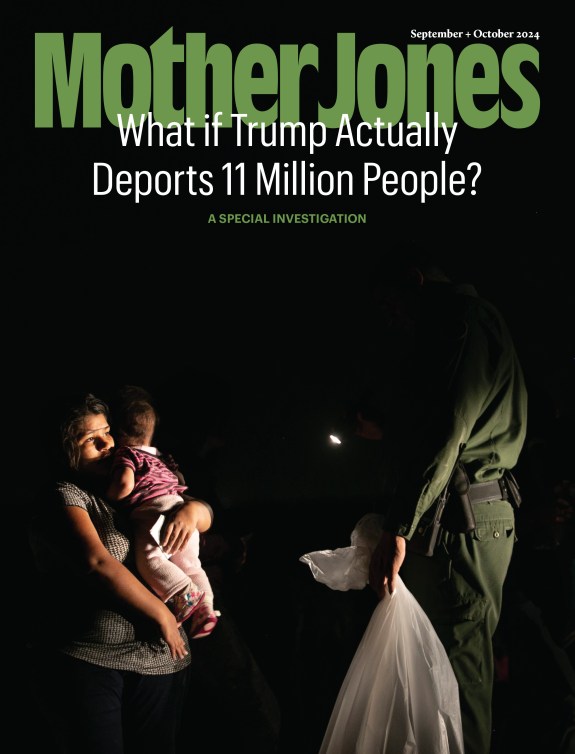

An AFP journalist views a "deepfake" video on January 25, 2019, in Washington, DC. Alexandra Robinson/AFP/Getty Images

Long-frustrated efforts to crack down on harmful content on social media sites may finally get some momentum thanks to an unlikely source: counterfeit videos known as “deepfakes” that experts worry could undermine democracies around the world.

The House Intelligence Committee held its first-ever hearing on deepfakes Thursday, probing the implications of AI technology that can create realistic counterfeit videos. At the hearing, members of Congress, including committee chairman Adam Schiff (D-Calif.), argued that deepfakes could justify altering laws that exempt technology companies from legal liability for harmful content on their platforms.

“If the social media companies can’t exercise the proper standard of care when it comes to a variety of fraudulent or illicit content, then we have to think about whether that immunity still makes sense,” Schiff said. He was referring to Section 230 of the Communications Decency Act of 1996, which shields internet companies from liability for harmful content that users post on their sites. Section 230 was a bipartisan effort, intended to “encourage the unfettered and unregulated development of free speech on the internet,” as one judge later put it.

If lawmakers significantly alter Section 230, it could be one of the first cracks in the dam of relative inaction on regulating the tech industry.

Lawmakers have expressed concern over the potential damage deepfakes could do. With the technology, someone could make a fake video of Jeff Bezos saying something he never said, causing Amazon’s share price to tank. Groups could undermine elections by issuing fake statements from candidates and politicians. In countries such as Myanmar and Gabon, the mere possibility of deepfakes is already causing confusion.

On Thursday, several members of Congress asked expert witnesses to weigh in on changing the law. “I just want to ask each of you whether we should get back to the requirement of an editorial function and a reasonable standard of care by treating these platforms as publishers,” said Rep. Peter Welch (D-Vt.). “That seems like one fundamental question that we would have to ask because that would be a legislative action.”

Schiff told reporters after the hearing that he’s open to working with other committees on these potential legislative changes.

“The Intelligence Committee has a unique perspective and concern about this issue because of the prospect of foreign interference and manipulation,” he said, noting that other committees will have different but relevant interests in reforming Section 230. “So I think this is an oversight issue for several committees—a legislative issue for several committees. And we’ll be working with our colleagues to determine the right response.”

While Republicans and Democrats in Congress agree that technology companies need to reform their content moderation practices (though they don’t necessarily agree on how), a small number are concerned that unraveling Section 230 could backfire in damaging ways. That includes Sen. Ron Wyden (D-Ore.), an original sponsor of the legislation that included Section 230. Wyden opposed 2018 legislation that amended Section 230 to make technology companies liable for sex trafficking content. The measure ended up hurting sex workers by taking away avenues for them to advertise online, forcing many onto the streets and into the hands of exploitative pimps. Wyden has said he worries that future reforms to Section 230 could cause other unforeseen problems.